I recently completed a migration from my old docker-machine-based infrastructure (so 2016!) to Digital Ocean’s hosted kubernetes service. The bulk of the work was completed in a single evening. It went almost seamlessly, but not so seamlessly that I wasn’t able to learn a few things. The goal of this writeup is not to serve as comprehensive documentation, but hopefully you’ll take away some ideas about how to get your own setup rolling.

(Oh, before we start, I’d like to disclaim that all the links to Digital Ocean in this article are blessed with my referral code.)

But why tho?

Kubernetes (k8s) is a hot technology right now. Its basic value proposition is this:

- You stand up a kubernetes cluster, which remains agnostic about what’s running on it.

- Then, thanks to the magic of containers, you can do all your deployments exclusively via config files. Everything in kubernetes is a yaml-formatted manifest that describes what you want, and k8s handles the work of making it happen.

Some of the things this gives you for free are zero-downtime deployments (rollover logic is built-in), and automatic restarts (if your container exits, kubernetes will bring it back automatically). It’s also really easy to manage a bunch of yaml files, compared to other options; just keep your manifests in git and you can redeploy on any kubernetes cluster.

We use k8s extensively at Telmediq, so I’ve had the chance to get familiar with it. For Later for Reddit, I was excited to make the move for a few reasons:

- The existing docker-machine deployment was getting a little long in the tooth; one of my nodes was having disk-space issues that I wasn’t looking forward to resolving, and I wanted to upgrade from Ubuntu 16.04 before maintenance updates fell off.

- Kubernetes enables zero-downtime deployments. Downtime is bad. Therefore, this is good.

- Automatic restarting means that my deepest infrastructure fear – the scheduling service going down and posts not going out – is handled, or at least depends on more than just my ability to write software that never crashes.

- My deployment workflow left much to be desired, in a few ways that kubernetes solves.

- I have big plans for some of kubernetes’ features (cronjobs, ingress management).

- My preview/staging environment was neglected, as tends to happen. K8s makes managing this more pleasant.

- I just find it easier to do essential maintenance tasks when the promise of working with something new and shiny exists.

Later for Reddit’s architecture, circa a few weeks ago

Prior to the move, and excluding the postgres database, Later ran in five containers:

- The API Service, which powers the dashboard.

- The Scheduler Service, which actually does the work of figuring out what’s due and posting it to Reddit.

- The Jobs service, which handles a few odds and ends that aren’t so important as the scheduler.

- An nginx Container, which served both the dashboard webpack bundle (https://dashboard.laterforreddit.com) and the front-page/marketing site (https://laterforreddit.com).

- An off-the-shelf Redis instance, used for caching (easy to run in docker, if you don’t care about persistence).

For production, I ran Later for Reddit on a pair of docker machines. One machine was dedicated to the scheduler, and the rest ran on another. This arrangement was mostly to help prevent accidental disruption of the scheduler service, either via resource competition or some other deployment snafu.

My deployment situation was as follows:

- Use docker-machine env to connect to the appropriate server

- Run a make command that builds and runs the image on the specified machine

This had the benefit of being simple, but I had to build in checks for my Makefile so that it wouldn’t deploy when my shell was pointing at the wrong docker machine. I’ve never been really satisfied with this setup. So come along with me and let’s do something about it!

Provisioning and configuring Kubernetes

I don’t think I can really improve on Digital Ocean’s documentation here. I more-or-less just followed it.

I also installed the NGINX Ingress Controller via DigitalOcean’s marketplace.

After you can kubectl get pods successfully, I also recommend getting K9S. It’s a hell of a lot easier than using kubectl to navigate your cluster, and I think it’s really instructive being able to watch pods get created and destroyed in real-time.

Registering my images

One of the requirements for my dream deployment is an internet-available container registry. I opted to use DO’s container registry; it may be in early access, but it’s worked out for me.

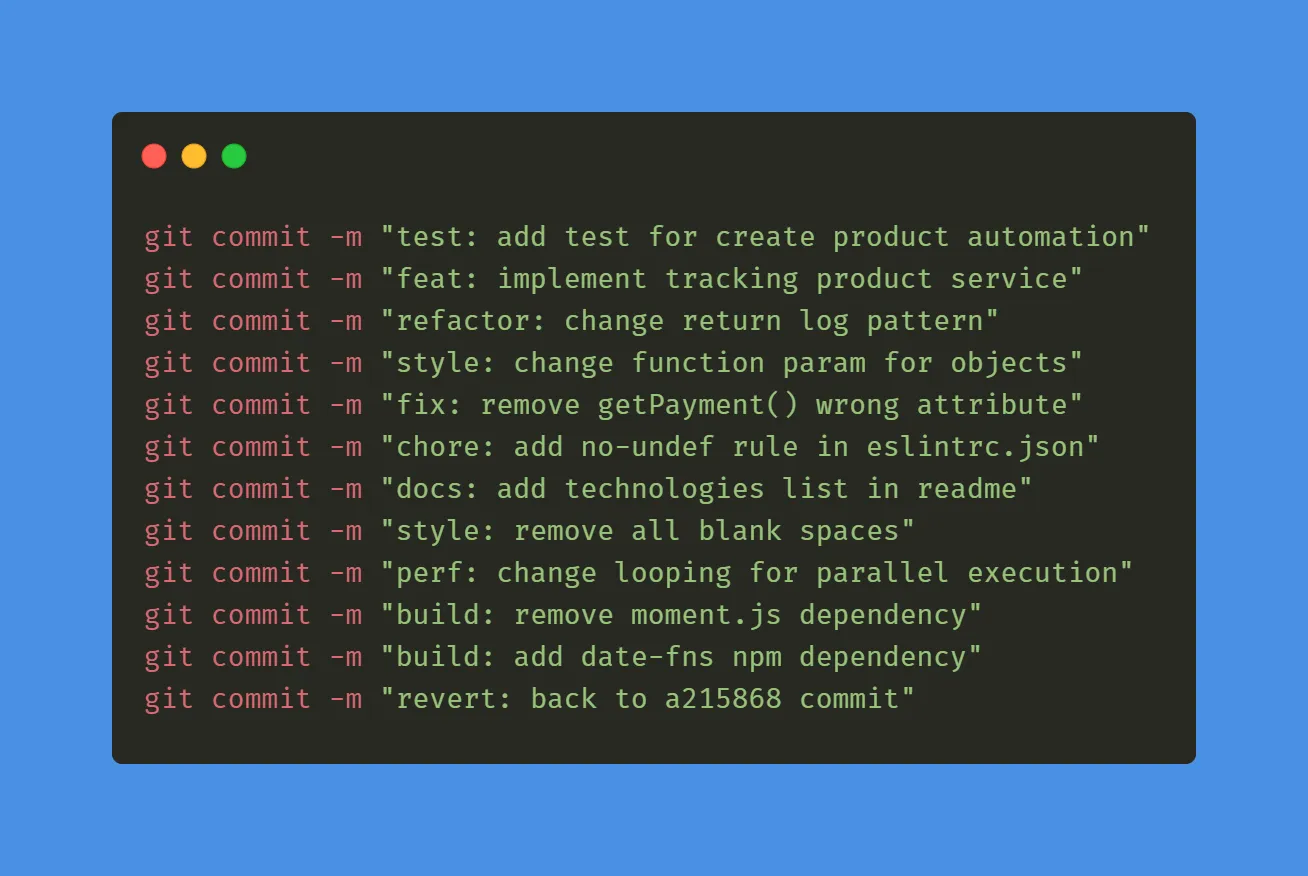

I made up a new Makefile to manage building, tagging, and pushing my images. It goes a little something like this:

    # Generic image build command
    build-image:
    	docker build -t $(image) $(context)
    	docker tag $(image) $(REGISTRY_URL)/$(image):latest
    	docker push $(REGISTRY_URL)/$(image):latest

    # Specific image build command
    build-api-image:
    	$(MAKE) image=$(API_IMAGE) context=./docker/api build-image

    # ...

These commands will build the image (with the specified name), tag it as the latest, and push that up to the remote registry.

K8S Basics

Kubernetes offers a plentitude of… well, they’re conceptual abstractions with particular properties suited to different workflows. You know, services, pods, replicasets, deployments, that sort of thing. I’ll call them things from here on.

A recurring problem with k8s tutorials and docs in general is that the sheer volume of concepts (“things”) involved is overwhelming. I’m going to try to keep to the smallest set of concepts that I can. The relevant things that I made use of for this deployment are:

- ConfigMaps, which for my purposes exist to provide environment variables to my running containers.

- Secrets, which I use exactly like configmaps except kubernetes keeps them secret-er. Store your database host and port in config and the password in secrets.

- Pods, which are essentially individual container processes. These are where your code runs. Pods are named things like production-rl-api-6827cd893-aep2k, and are generally managed by things called Controllers (as opposed to being manually created).

- Deployments are a sort of Controller. You can define a deployment declaring something like “run this many copies of this docker image with these environment variables”, and Kubernetes will make sure that those pods exist (there’s an in-between thing here I left out called ReplicaSet, which is another type of Controller created by the Deployment and which actually creates the pods, but you can live without this information). Deployments have names like production-rl-api.

- Services, which expose logical network names (e.g. hostnames) and connect those up to pods, via selectors. Services are only required for deployments/replicasets/things which require incoming network access. In my deployment, these are also called e.g. production-rl-api.

- Ingresses, which are useful for controlling things like which path/hostname points at which Service.

To create any of the above, you first create a YAML config file, then apply it with kubectl: kubectl apply -f my-config.yaml. This will load the config into kubernetes and create the associated objects (things).

Preparing for Kubernetes

Thanks to the ingress, I no longer have to roll my own nginx configuration. This made it easy to split my prior nginx container into separate Dashboard and Homepage containers. With that done the configuration for my new deployment is as follows:

- rl-config: A ConfigMap containing the common configuration for the whole application

- rl-secrets: A Secret containing some more sensitive config

- rl-api: A Deployment and a Service for the API

- rl-dashboard: A Deployment and a Service for the Dashboard

- rl-marketing: A Deployment and a Service for the Homepage

- rl-redis: A Deployment and a Service for Redis

- rl-scheduler: A Deployment for the Scheduler

- rl-jobs: A Deployment for the Jobs service

- rl-ingress: An Ingress to manage it all.

Notice that rl-scheduler and rl-jobs don’t have an associated service. This is because nothing ever needs to talk to them; they start up and do their thing all by themselves, no inputs required.

Deployment Details

Let’s get right in here a little bit. Here are some annotated examples for the config and secrets:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: rl-config
    # Just pop in key-value pairs. They don't have to be all caps, but it's usual for environment variables.
    data:
      DATABASE_HOST: mycooldatabase.example.com
      # ...
    # --- separates individual configurations in yaml files
    ---
    apiVersion: v1
    kind: Secret
    metadata:
      name: rl-secrets
    type: Opaque
    # These are just like in the configmap, but the values are base64-encoded to make
    # them less susceptible to passing glances.
    data:
      DATABASE_PASSWORD: aHVudGVyMg==
      # ...

(A simple way to generate base64-encoded values from a command line is: echo -n "myvalue" | base64)

You could put that in two files, or in one file. Then, just kubectl apply -f the-file.yaml and voila! Kubernetes knows all your secrets (and config).
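If hand-encoding values gets tedious, Secrets also accept a stringData field that takes plain-text values and encodes them when the object is written. A minimal sketch, reusing the rl-secrets name and the placeholder password from the example above:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: rl-secrets
type: Opaque
# stringData takes plain-text values. Kubernetes stores them base64-encoded
# under `data`, so this should end up equivalent to the hand-encoded version.
stringData:
  DATABASE_PASSWORD: hunter2  # placeholder value, same as the encoded example
```

Either way, base64 is an encoding and not encryption, so a file like this deserves more care than the rest of your manifests if you keep them in git.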

Services and deployments work the same way. Here are the annotated manifests for the rl-api service and deployment:

    kind: Service
    apiVersion: v1
    metadata:
      name: rl-api
    spec:
      # The `selector` option is how the service knows which pods it should forward traffic to
      selector:
        app: rl-api
      # The `ports` option lets you map/remap ports. With this configuration, the
      # service will accept traffic on port 80 and forward it to the pods on port 8080
      ports:
        - name: http
          protocol: TCP
          port: 80
          targetPort: 8080
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: rl-api
    spec:
      # This deployment will run two pods. When restarting or updating, it will
      # gracefully roll them over
      replicas: 2
      # On a deployment, `selector` is used so that k8s can tell, later on, which
      # pods belong to this deployment.
      # It *must* match the `labels` defined below.
      selector:
        matchLabels:
          app: rl-api
      # The `template` is a template for a pod. That is to say, pods created by this
      # deployment will have this configuration.
      template:
        metadata:
          # Labels are the targets for Selectors. The pods created by this
          # deployment will have these labels, and so the service will be able
          # to route traffic to them.
          labels:
            app: rl-api
        spec:
          containers:
            - name: rl-api
              # This is the docker image that the pods will run. `latest` is
              # counter-recommended, but I think it's fine to start with.
              image: route.to/rl-api:latest
              imagePullPolicy: Always
              # This defines where the pod will get its environment variables from;
              # in this case, the config files mentioned earlier.
              envFrom:
                - secretRef:
                    name: rl-secrets
                - configMapRef:
                    name: rl-config

This configuration is a complete (except for the config and image, get your own) definition of a working deployment and service. You could run kubectl apply -f rl-api.yaml and have two pods running on your own kubernetes cluster right away! Of course, to get the most out of kubernetes, you might want to add some resource limits, and perhaps some readiness or liveness checks.
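As a rough idea of what those extras can look like, here is a sketch of the same rl-api deployment with an explicit rollout strategy, resource requests/limits, and HTTP probes. The /healthz path and every number in it are assumptions for illustration; your app needs to actually serve a health endpoint on port 8080 for the probes to pass.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rl-api
spec:
  replicas: 2
  # Keep both pods serving during a rollout: start one new pod first,
  # and only then retire an old one.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 0
      maxSurge: 1
  selector:
    matchLabels:
      app: rl-api
  template:
    metadata:
      labels:
        app: rl-api
    spec:
      containers:
        - name: rl-api
          image: route.to/rl-api:latest
          imagePullPolicy: Always
          ports:
            - containerPort: 8080
          # Illustrative values; measure your own service before settling on any.
          resources:
            requests:
              cpu: 100m
              memory: 128Mi
            limits:
              memory: 256Mi
          # Readiness gates traffic: the service only routes to pods that pass.
          readinessProbe:
            httpGet:
              path: /healthz  # hypothetical endpoint
              port: 8080
            initialDelaySeconds: 5
            periodSeconds: 10
          # Liveness restarts the container if it stops responding.
          livenessProbe:
            httpGet:
              path: /healthz  # hypothetical endpoint
              port: 8080
            initialDelaySeconds: 15
            periodSeconds: 20
          envFrom:
            - secretRef:
                name: rl-secrets
            - configMapRef:
                name: rl-config
```

The readiness probe is the piece that matters most for zero-downtime rollovers, since it keeps traffic away from a new pod until it reports that it can serve.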

All of the other components follow this pattern, so I’ll spare you those details. Finally bringing it all together is the ingress:

    apiVersion: networking.k8s.io/v1beta1
    kind: Ingress
    metadata:
      name: rl-ingress
      annotations:
        kubernetes.io/ingress.class: nginx
    spec:
      rules:
        # Marketing gets the homepage
        - host: laterforreddit.com
          http:
            paths:
              - backend:
                  serviceName: production-rl-marketing
                  servicePort: 80
        # Dashboard redirects certain routes to rl-api and the rest to rl-dashboard
        - host: dashboard.laterforreddit.com
          http:
            paths:
              - path: /auth
                backend:
                  serviceName: production-rl-api
                  servicePort: 80
              - path: /graphql
                backend:
                  serviceName: production-rl-api
                  servicePort: 80
              # No path -- catch-all route
              - backend:
                  serviceName: production-rl-dashboard
                  servicePort: 80
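One caveat if you are following along on a newer cluster: the networking.k8s.io/v1beta1 Ingress API was removed in Kubernetes 1.22, and the v1 API spells backends a little differently. A sketch of the dashboard rule in the v1 form, assuming an IngressClass named nginx exists in the cluster (check with kubectl get ingressclass):

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: rl-ingress
spec:
  # Replaces the kubernetes.io/ingress.class annotation.
  ingressClassName: nginx
  rules:
    - host: dashboard.laterforreddit.com
      http:
        paths:
          - path: /graphql
            pathType: Prefix  # required in v1
            backend:
              service:
                name: production-rl-api
                port:
                  number: 80
          # Catch-all route: in v1 this is written as an explicit "/" prefix.
          - path: /
            pathType: Prefix
            backend:
              service:
                name: production-rl-dashboard
                port:
                  number: 80
```

The remaining rules translate the same way: serviceName becomes service.name, servicePort becomes service.port.number, and every path gains a pathType.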

Jobs

Another separate container exists to manage and run database migrations for Later. I define this config as a Job. A Job is more-or-less just like a Deployment, except it expects its associated containers to run once and complete, instead of being long-running.

    apiVersion: batch/v1
    kind: Job
    metadata:
      name: rl-migrate
    spec:
      # This will cause the job to destroy itself after completing, saving me the effort.
      ttlSecondsAfterFinished: 60
      # This `template` is exactly the same as what appears in the Deployment config above
      template:
        spec:
          containers:
            - name: rl-migrate
              image: route.to/migrate-img
              imagePullPolicy: Always
              command: ["run-migrations"]
              envFrom:
                - secretRef:
                    name: rl-secrets
                - configMapRef:
                    name: rl-config
          restartPolicy: Never

A kubectl apply -f job-migrate.yaml will kick off this job.
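One thing worth knowing about "run once": by default a Job retries failed pods (backoffLimit defaults to 6), which may not be what you want for a migration. A hedged variation on the manifest above that fails fast instead; the deadline value is an arbitrary example:

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: rl-migrate
spec:
  ttlSecondsAfterFinished: 60
  # Don't automatically retry a failed migration.
  backoffLimit: 0
  # Mark the job as failed if it runs longer than ten minutes (illustrative value).
  activeDeadlineSeconds: 600
  template:
    spec:
      restartPolicy: Never
      containers:
        - name: rl-migrate
          image: route.to/migrate-img
          imagePullPolicy: Always
          command: ["run-migrations"]
          envFrom:
            - secretRef:
                name: rl-secrets
            - configMapRef:
                name: rl-config
```

With ttlSecondsAfterFinished set this low, the job and its pod disappear about a minute after finishing, so grab the output (kubectl logs job/rl-migrate) promptly if something goes wrong.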

Outcome

This migration turned out to be a great success. I had a production-ready cluster the first night, completed the switchover and decommissioned the old servers inside of a work week, and things have been running great.

Deploying an update has become safer than before; I build the image, push it up to the registry, then run kubectl rollout restart deployment/<the-svc>. Kubernetes gracefully rolls over the pods, and restarts them with the updated image. No downtime (except for the jobs, which don’t matter), no fuss.

I did run into a few hiccups:

- My initial dashboard service was a LoadBalancer when it should’ve been a ClusterIP type. This caused DO to provision a new load balancer for this service, and exposed the service publicly. Not an issue, just a redundant resource.

- Without a proper CI/CD setup, it’s inconvenient to generate unique tags for each image and then apply this tag to the deployments. So instead, my pods just look for the release tag, which I overwrite to update. This occasionally causes some annoyance as I wait for caching in the registry/k8s to dissipate. I guess I’ll have to get that rolling at some point (if you have recommendations, I want them!)

- When adding a staging environment, I had an issue with pod traffic getting crossed over. Turns out I needed to add more labels to my prod pods and services so they went to the right spot. A little dose of kustomization and a redeploy took care of that (lmk if you want to hear about my kustomize situation).
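For a rough idea of how kustomize can help with the last two items, here is a sketch of a production overlay. The directory layout, the env label, and the tag value are all made up for illustration, and it assumes the plain manifests (rl-api and friends) live in a base directory:

```yaml
# overlays/production/kustomization.yaml (hypothetical path)
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - ../../base
# Prefixes every resource name, e.g. rl-api becomes production-rl-api.
namePrefix: production-
# Added to every resource *and* every selector, so production services
# only match production pods and staging traffic can't cross over.
commonLabels:
  env: production
# Pins the image to a unique tag instead of overwriting a shared one.
images:
  - name: route.to/rl-api
    newTag: "2020-06-01-abc1234"  # illustrative; e.g. a date plus git SHA
```

An overlay like this is applied with kubectl apply -k overlays/production. A staging overlay would be the same file with a different prefix, label, and tag.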

But, all in all, I’m very pleased with the new setup (and myself). Digital Ocean has done a fantastic job making Kubernetes accessible; if you were on the fence, I would encourage you to give it a try. Worst case, you have to pay for a few pods for a few days, until you decide it isn’t for you.
