Moore’s Law has been an essential principle in the evolution of IT over the decades. In simple terms, it predicts that the number of transistors on a microchip will double roughly every two years, steadily increasing processing power.

However, a lesser-known trend has emerged in the data center space. Although the amount of data processing in data centers increased roughly 6-fold between 2010 and 2018, data center energy consumption rose by only about 6% over the same period, according to Masanet et al. (2020). How was this possible, and does it point to a more sustainable future?
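Taken together, the two figures imply a sharp drop in energy use per unit of work. A back-of-the-envelope sketch, assuming exactly 6-fold growth in processing and 6% growth in energy over the eight years from 2010 to 2018 (rounded figures, not the study’s own model):

```python
def energy_per_unit_work(workload_factor: float, energy_factor: float) -> float:
    """Relative energy per unit of work after workload and energy grow by the given factors."""
    return energy_factor / workload_factor


def annual_rate(total_ratio: float, years: int) -> float:
    """Constant yearly rate that compounds to total_ratio over the given number of years."""
    return total_ratio ** (1 / years) - 1


# Rounded figures quoted in the text: ~6x processing, +6% energy, 2010 to 2018.
intensity = energy_per_unit_work(workload_factor=6.0, energy_factor=1.06)
yearly = annual_rate(intensity, years=2018 - 2010)

print(f"Energy per unit of work: {intensity:.1%} of the 2010 level")  # 17.7%
print(f"Implied change per year: {yearly:.1%}")                       # -19.5%
```

In other words, under these assumptions each unit of processing needed more than 80% less energy in 2018 than in 2010.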

Where does the data come from?

To put this development into context, we first need to understand where the increase in data processing came from.

Driven by new technological devices and growing media consumption, global data production has grown from an estimated 2 zettabytes in 2010 to 41 zettabytes in 2019. IDC research estimates that the global volume of data will reach 175 zettabytes by 2025.
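These estimates imply a compound annual growth rate that is easy to check. A minimal sketch using the zettabyte figures quoted above; because they are estimates (and the 2025 value is a projection), the resulting rates are only indicative:

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values measured `years` apart."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1 / years) - 1


# Zettabyte estimates quoted in the text.
historical = cagr(start=2, end=41, years=2019 - 2010)
projected = cagr(start=41, end=175, years=2025 - 2019)

print(f"2010-2019: {historical:.1%} per year")              # 39.9% per year
print(f"2019-2025 (projected): {projected:.1%} per year")   # 27.4% per year
```

The projected rate is lower in percentage terms, but it applies to a much larger base, so the absolute yearly increase keeps growing.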

The pandemic is one of the reasons, as the use of messaging and social media increased during this period. Users in Latin America, for example, spend an average of more than 3.5 hours a day on social networks.

Likewise, a separate study found that 71% of respondents in the Middle East said their use of WhatsApp and other messaging apps had increased since the pandemic hit.

What impact does all this data have?

The concept of data gravity was developed to describe the impact of this data explosion. Coined by engineer Dave McCrory, the term refers to the tendency of a large body of data to attract applications and services, which in turn accumulate still more data. This can leave data immobilized and underutilized: large, fast-growing datasets become hard to move, limiting what can be done with them. Only low-latency, high-bandwidth services, combined with new data architectures, can counter this growing phenomenon.
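One reason large datasets “get stuck” is simple arithmetic: moving data takes time proportional to its size divided by the available bandwidth. The sketch below illustrates that effect; it is not McCrory’s own formulation. The 1 PB dataset and the link speeds are hypothetical, and the link is assumed ideal and uncontended unless `efficiency` is lowered.

```python
def transfer_days(size_bytes: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Days needed to move size_bytes over a link of link_gbps gigabits per second.

    efficiency scales the nominal bandwidth to account for protocol overhead
    and contention (1.0 means the full nominal rate is achieved).
    """
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("invalid link parameters")
    bits = size_bytes * 8
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 86_400  # seconds per day


PETABYTE = 1e15  # hypothetical dataset size: one decimal petabyte, in bytes

for gbps in (1, 10, 100):
    print(f"1 PB over {gbps:>3} Gbps: {transfer_days(PETABYTE, gbps):.1f} days")
```

Even over a dedicated 10 Gbps link, a single petabyte takes more than nine days to move, which is why applications tend to be drawn to the data rather than the other way around.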

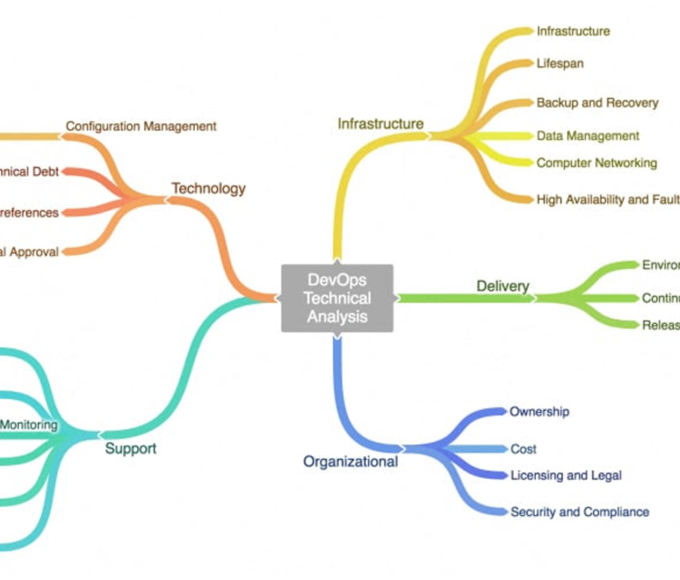

What technological developments made this possible?

Multiple technological developments have made it possible to handle this data explosion with only minimal increases in energy consumption, from improvements in processor design and manufacturing to more efficient power supplies and storage units. Another factor is the migration of workloads and infrastructure from on-premises facilities to the cloud.

Some technology companies have been committed to sustainable business for decades, which has meant a renewed focus on efficiency in product development and operation. Significant gains have come in power and cooling efficiency through UPS systems and modular power supplies. Lithium-ion batteries improve efficiency and extend operating life, reducing the use of raw materials and therefore environmental impact. Modular designs also facilitate “hot swapping”: power modules can be added or replaced with zero downtime, which also improves safety for operators and service personnel.

Advances in cooling, such as airflow management through rack, row, and pod containment systems, liquid cooling, and intelligent software control, ensure that cooling efficiency keeps pace with the gains in raw data processing.

By ensuring that every link in the power chain, from the grid to the rack, is as efficient, intelligent, and instrumented as possible, we lay the foundation that keeps rapidly advancing computing, networking, and storage running every day.
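Because the stages of the power chain are connected in series, their efficiencies multiply, so a small loss at each link compounds. A minimal sketch; the stage names, efficiency figures, and the 500 kW IT load are hypothetical values chosen for illustration, not measurements of any product:

```python
from math import prod


def chain_efficiency(stage_efficiencies: dict[str, float]) -> float:
    """End-to-end efficiency of stages connected in series (product of each stage)."""
    for name, eff in stage_efficiencies.items():
        if not 0 < eff <= 1:
            raise ValueError(f"{name}: efficiency must be in (0, 1]")
    return prod(stage_efficiencies.values())


# Hypothetical stage efficiencies from the grid to the rack, for illustration only.
legacy = {"transformer": 0.98, "ups": 0.92, "pdu": 0.97, "server_psu": 0.90}
upgraded = {"transformer": 0.98, "ups": 0.97, "pdu": 0.98, "server_psu": 0.94}

it_load_kw = 500.0  # hypothetical power actually consumed by the IT equipment
for label, chain in (("legacy", legacy), ("upgraded", upgraded)):
    eff = chain_efficiency(chain)
    grid_kw = it_load_kw / eff  # power drawn from the grid to deliver the IT load
    print(f"{label}: {eff:.1%} efficient, {grid_kw - it_load_kw:.0f} kW lost")
```

With these made-up numbers, the upgraded chain roughly halves the power lost between the grid and the IT load (about 135 kW versus 71 kW).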

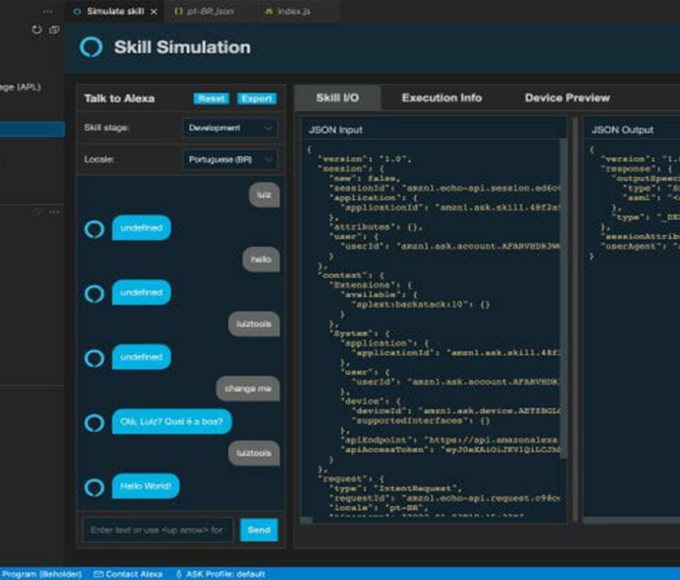

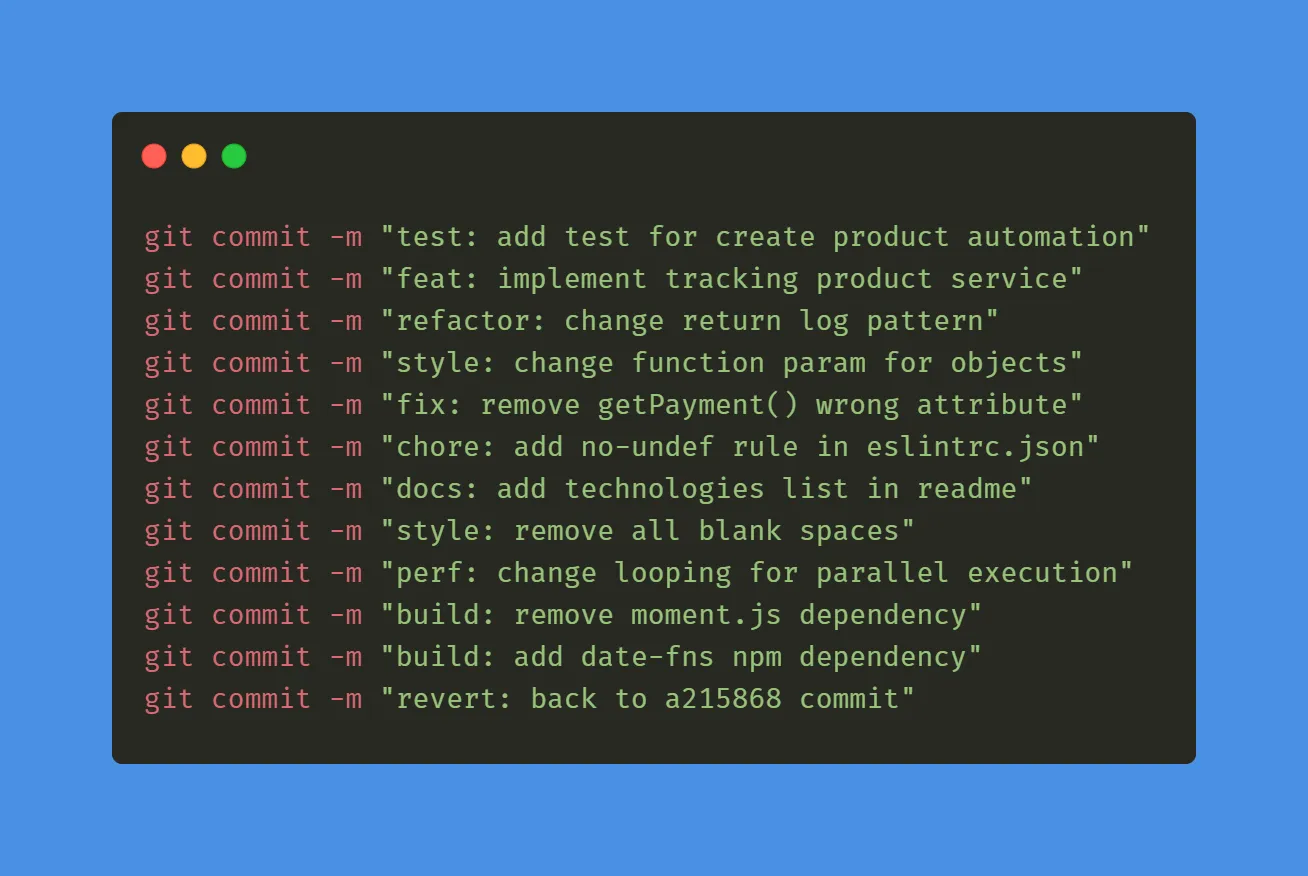

Where do software and applications fit in here?

Another key technological development behind this relentless efficiency has been better instrumentation, data collection, and analysis, which enable better control and orchestration. Google’s DeepMind illustrated this in 2016, when its AI reduced the energy used for cooling in one of Google’s data centers by around 40%, which equated to a 15% reduction in overall power usage effectiveness (PUE) overhead. This was achieved using historical data from data center sensors, such as temperatures, power, pump speeds, and setpoints, to improve energy efficiency. The AI system predicted the data center’s temperature and pressure over the next hour and made recommendations to control consumption accordingly.
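DeepMind’s system used neural networks; the sketch below is far simpler and is not its method. It only illustrates the same loop (learn from historical sensor readings, predict the next hour, recommend a setting) under loudly stated assumptions: a single hypothetical linear relationship between cooling power and inlet temperature one hour later, fitted with ordinary least squares. The data and function names are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class LinearModel:
    """y = intercept + slope * x, fitted from historical samples."""
    slope: float
    intercept: float

    def predict(self, x: float) -> float:
        return self.intercept + self.slope * x


def fit_least_squares(xs: list[float], ys: list[float]) -> LinearModel:
    """Ordinary least-squares fit of a single-feature linear model."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired samples")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return LinearModel(slope=slope, intercept=mean_y - slope * mean_x)


def recommend_cooling_kw(model: LinearModel, max_temp_c: float,
                         min_kw: float, max_kw: float) -> float:
    """Lowest cooling power whose predicted next-hour temperature is <= max_temp_c.

    The result is clamped to the equipment's [min_kw, max_kw] range; if it is
    clamped to max_kw, the temperature limit may not be reachable.
    """
    if model.slope >= 0:
        raise ValueError("expected more cooling to predict a lower temperature")
    # intercept + slope * kw <= max_temp_c  <=>  kw >= (max_temp_c - intercept) / slope
    needed_kw = (max_temp_c - model.intercept) / model.slope
    return min(max(needed_kw, min_kw), max_kw)


# Hypothetical sensor history: cooling power (kW) vs. inlet temperature one hour later (deg C).
cooling_kw = [100.0, 120.0, 140.0, 160.0]
next_hour_temp_c = [25.0, 23.0, 21.0, 19.0]

model = fit_least_squares(cooling_kw, next_hour_temp_c)
print(f"Recommended cooling: {recommend_cooling_kw(model, 24.0, 80.0, 200.0):.1f} kW")
# → Recommended cooling: 110.0 kW
```

A production system would use many more sensor inputs and a model able to capture nonlinear interactions, but the structure (fit on history, predict, then pick the cheapest setting that satisfies a constraint) is the same.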

The development of data center infrastructure management (DCIM) systems has also continued apace, enabling AI integration that takes advantage of all these hardware and infrastructure developments. What were once experiments are now standard features, offering unprecedented visibility and control. For those designing new facilities, software such as ETAP allows energy efficiency to be built into the design from the start while also accommodating microgrid architectures.

How will new data sources contribute to this?

The data explosion is expected to continue, driven by industrial IoT and 5G, along with the rise of automation in general and of autonomous vehicles. Data generated far from centralized data infrastructure must be processed and turned into intelligence quickly, as needed.

New data architectures are expected to improve how efficiently all of this is handled. Edge computing is seen as an important approach to managing the growing volume of data that will be produced.

In one example, genomic research typically generates terabytes of data every day. Sending all of that data to a centralized data center would be slow, bandwidth-intensive, and inefficient. The Wellcome Sanger Institute adopted an edge computing approach that lets it process data close to where it is produced, at the genomic sequencers, centralizing only what is necessary. This saves storage and bandwidth and shortens the time to insight.
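The pattern of processing data where it is produced and centralizing only the results can be sketched in a few lines. This is a generic illustration, not the Sanger Institute’s pipeline: the readings, the threshold, and the `process_at_edge` function are hypothetical, and JSON size stands in as a rough proxy for the network traffic each design would generate.

```python
import json
import statistics


def process_at_edge(readings: list[float], threshold: float) -> dict:
    """Reduce a raw batch to a compact summary, keeping only values above threshold."""
    if not readings:
        raise ValueError("empty batch")
    return {
        "count": len(readings),
        "mean": statistics.fmean(readings),
        "max": max(readings),
        "anomalies": [r for r in readings if r > threshold],
    }


# Hypothetical raw batch produced next to the instrument.
raw = [float(i % 50) for i in range(10_000)]
summary = process_at_edge(raw, threshold=48.0)

# Compare what would cross the network in each design (JSON size as a rough proxy).
raw_bytes = len(json.dumps(raw).encode())
summary_bytes = len(json.dumps(summary).encode())
print(f"Send raw: {raw_bytes} bytes; send summary: {summary_bytes} bytes")
```

Real genomic pipelines are far more complex, but the design choice is the same: the heavy lifting happens next to the instrument, and only the reduced result travels to the central data center.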

Modular data centers, micro data centers, and better storage management will help handle this growing wave efficiently, keeping data center power consumption flat in the future.

What effects will this have on the entire data ecosystem?

However, efficiency must extend not only across the supply chain but also across the entire life cycle. Suppliers and partners must all be involved so that no part of the ecosystem lags behind in adopting efficiency tools. This applies from the design of new equipment and applications through their entire useful life. Understanding how the entire business ecosystem affects the environment will be vital to truly achieving sustainability goals.

Agreed standards, transparency, and measurability are vital factors in ensuring results.

These principles are taking hold everywhere, and major efforts are being made to improve. Greater transparency is now accepted and embraced, with more and more organizations reporting on their progress.

Shared tools and processes

The data center sector can serve as a model for organizations and industries that want to follow the path of sustainability toward greater circularity. Its expertise and efficiency, combined with operational intelligence tools and commitments to strict net-zero targets, can not only handle the world’s data explosion and digital demands but do so sustainably, while providing others with the tools and knowledge to do the same in their own industries.

*The content of this article is the author’s responsibility and does not necessarily reflect the opinion of iMasters.
