One of the most common ways to implement a cache in Go applications is to use a map. If you have done this, you may have noticed that memory consumption increases gradually and usually only goes back to “normal” after a machine or pod restart.

This happens because of the way the map works. Therefore, before seeing what we can do to solve this kind of problem, let’s understand the map better.

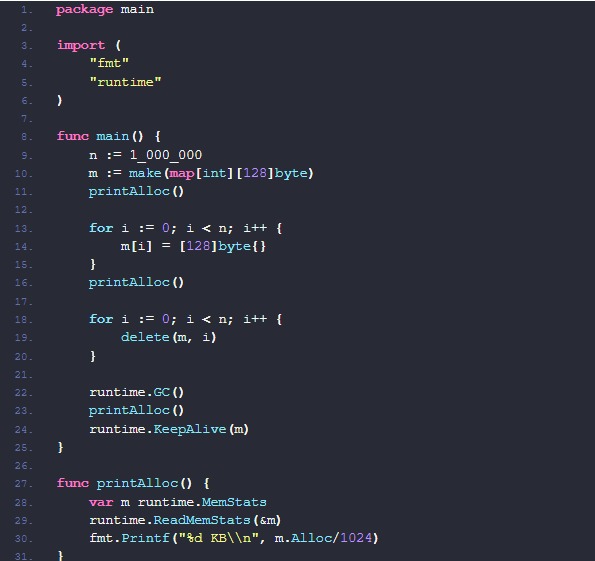

To illustrate the problem, consider a variable of type map[int][128]byte, which will be “loaded” with 1 million elements, all of which will then be removed.

Each call to the printAlloc() function will display the memory amount allocated to the variable m at that particular time.

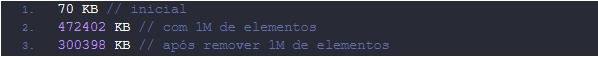

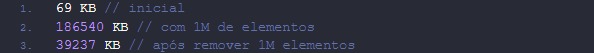
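Since the listing above is only available as an image, here is a minimal sketch of such an experiment. It assumes that printAlloc() reports the heap memory in use through runtime.MemStats after forcing a garbage collection; the helpers fill and empty and the constant n are hypothetical names introduced only for this illustration.

```go
package main

import (
	"fmt"
	"runtime"
)

const n = 1_000_000 // number of elements, as in the text

// printAlloc forces a garbage collection and prints the heap memory in use.
// Assumption: the original printAlloc() works in a similar way.
func printAlloc() {
	var ms runtime.MemStats
	runtime.GC()
	runtime.ReadMemStats(&ms)
	fmt.Printf("%d MB\n", ms.Alloc/1024/1024)
}

// fill inserts count zero-valued elements into m (hypothetical helper).
func fill(m map[int][128]byte, count int) {
	for i := 0; i < count; i++ {
		m[i] = [128]byte{}
	}
}

// empty deletes the keys 0..count-1 from m (hypothetical helper).
func empty(m map[int][128]byte, count int) {
	for i := 0; i < count; i++ {
		delete(m, i)
	}
}

func main() {
	m := make(map[int][128]byte)
	printAlloc() // empty map

	fill(m, n)
	printAlloc() // after adding 1 million elements

	empty(m, n)
	printAlloc() // after deleting all of them

	// Keep m reachable so the map itself is not collected before the
	// last measurement.
	runtime.KeepAlive(m)
}
```

The exact numbers depend on the Go version and platform, so no expected output is shown here.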

When running the above code, I got the following result.

Even after removing all map entries, the memory used by the map did not return to its initial value. Curious, don’t you think?

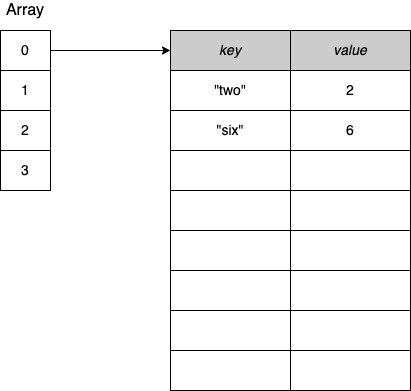

Well, this happens because, in Go, maps are implemented as a hash table: an array in which each position points to a bucket of key-value pairs.

Hash Table with focus on bucket 0

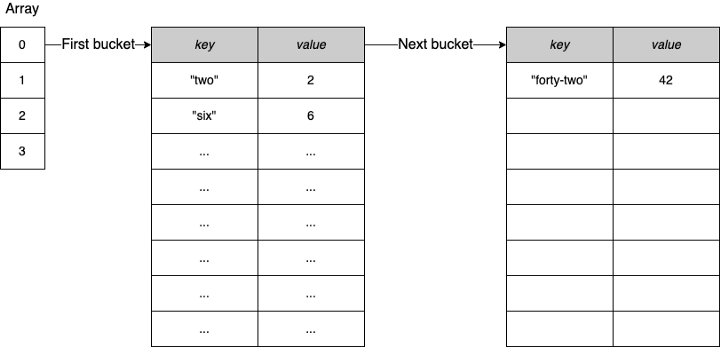

Each bucket contains a fixed-length array of 8 positions. When that array is full and Go needs to store another item in the same bucket, a new bucket (an overflow bucket) is created and linked to the previous one.

The struct runtime.hmap, which is the header of a map, has many fields, one of which is B uint8. This field determines the number of buckets in the map: a map always has 2^B buckets.

After adding 1 million elements, the value of B will be 18 (2^18 = 262,144 buckets). However, when those same 1 million elements are removed, the value of B will still be 18.
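The value 18 can be checked with a little arithmetic. The sketch below assumes the growth rule of the bucket-based runtime implementation described here: a map grows once it holds more than 8 entries and the average exceeds 6.5 entries per bucket. The function estimateB is a hypothetical helper written for this article, not part of the runtime.

```go
package main

import "fmt"

// Constants mirroring the bucket-based map implementation (assumption):
// 8 slots per bucket and a maximum average load of 13/2 = 6.5 per bucket.
const (
	bucketCnt     = 8
	loadFactorNum = 13
	loadFactorDen = 2
)

// estimateB returns the smallest B for which 2^B buckets can hold n
// entries without exceeding the load factor.
func estimateB(n int) uint8 {
	var b uint8
	for {
		buckets := 1 << b
		if n <= bucketCnt || n <= loadFactorNum*(buckets/loadFactorDen) {
			return b
		}
		b++
	}
}

func main() {
	b := estimateB(1_000_000)
	fmt.Printf("B = %d, buckets = %d\n", b, 1<<b)
	// prints "B = 18, buckets = 262144"
}
```

With B = 17 the map could hold at most 6.5 × 131,072 = 851,968 entries, which is fewer than 1 million, so one more doubling is needed.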

This happens because the number of buckets in a map cannot be reduced. So, whenever we remove an item from a map, Go frees that slot for reuse but never decreases the total number of slots.

Therefore, in a cache built on a map, memory consumption follows the largest number of entries the map has ever held, not the number it holds now, and so it tends to creep up over time.

To solve this problem, the best strategy is to create a new map from time to time and “migrate” the current cache data to it. After the “migration”, drop every reference to the old map so that the Garbage Collector can remove it from memory, buckets included.
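As a rough sketch of this migration, the hypothetical helper compact below copies the live entries into a new map sized for the current number of entries. It is single-goroutine only; a real cache would need to guard the swap with a mutex or similar.

```go
package main

import "fmt"

// compact returns a new map holding the same entries as old. The new map
// is allocated for len(old) entries, so it does not inherit the bucket
// array that old grew to at its peak. (Hypothetical helper.)
func compact[K comparable, V any](old map[K]V) map[K]V {
	fresh := make(map[K]V, len(old))
	for k, v := range old {
		fresh[k] = v
	}
	return fresh
}

func main() {
	cache := make(map[int][128]byte)
	for i := 0; i < 1_000_000; i++ {
		cache[i] = [128]byte{}
	}
	// Most entries expire; only 10 stay in the cache.
	for i := 10; i < 1_000_000; i++ {
		delete(cache, i)
	}

	// Reassigning the variable drops the last reference to the old map,
	// which makes it eligible for garbage collection.
	cache = compact(cache)
	fmt.Println(len(cache)) // prints 10
}
```

How often to run such a compaction is a trade-off: each run copies every live entry, so it makes the most sense after a large share of the entries has been deleted.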

However, if this approach is too complex to implement in your system, a simpler way to reduce memory consumption is to store pointers as the map values (map[int]*[128]byte).

By making this simple change to the code we wrote at the beginning, the result of the execution was a reduction of approximately 87% in the map size after removing the elements.
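A minimal sketch of the pointer variant follows, with the hypothetical helpers fillPtr and emptyPtr. The idea is that each slot now holds only a pointer (8 bytes on a 64-bit platform) instead of a 128-byte array, and the arrays themselves can be collected once their entries are deleted.

```go
package main

import "fmt"

// fillPtr inserts count elements; each value is a pointer to its own
// separately allocated array. (Hypothetical helper.)
func fillPtr(m map[int]*[128]byte, count int) {
	for i := 0; i < count; i++ {
		m[i] = &[128]byte{}
	}
}

// emptyPtr deletes the keys 0..count-1. The arrays they pointed to become
// unreachable and can be reclaimed by the garbage collector, while the
// buckets keep only pointer-sized slots. (Hypothetical helper.)
func emptyPtr(m map[int]*[128]byte, count int) {
	for i := 0; i < count; i++ {
		delete(m, i)
	}
}

func main() {
	m := make(map[int]*[128]byte)
	fillPtr(m, 1_000_000)
	emptyPtr(m, 1_000_000)
	fmt.Println(len(m)) // prints 0
}
```

The number of buckets still does not shrink with this change; what shrinks is the size of each bucket.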

HOWEVER… Before you change all your code to use pointers, it is worth mentioning that this change only makes a difference if your elements or keys are at most 128 bytes in size, since for elements/keys larger than that Go automatically stores a pointer to the object in the bucket and not the value itself.
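To check which side of the threshold a type falls on, you can compare its size with the limit. The sketch below assumes the 128-byte inline limit mentioned above; storedInline and maxInlineSize are hypothetical names, not runtime APIs.

```go
package main

import (
	"fmt"
	"unsafe"
)

// maxInlineSize is the assumed limit up to which keys and elements are
// stored directly inside the buckets.
const maxInlineSize = 128

// storedInline reports whether a value of type T is small enough to be
// kept inline in a bucket under that assumption. (Hypothetical helper.)
func storedInline[T any]() bool {
	var v T
	return unsafe.Sizeof(v) <= maxInlineSize
}

func main() {
	fmt.Println(storedInline[[128]byte]())  // prints true
	fmt.Println(storedInline[[129]byte]())  // prints false
	fmt.Println(storedInline[*[128]byte]()) // prints true
}
```

A [128]byte value sits exactly at the limit, which is why switching it to a pointer makes such a visible difference in the example above.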


See you next time.
